In practically any discipline, it's a good idea to have others evaluate your work with fresh eyes, and this is especially true in user experience and web design. Otherwise, your partiality for your own work can skew your perception of it. Learning directly from the people that your work is actually for — your users — is what enables you to craft the best user experience possible.

UX and design professionals leverage usability testing to get user feedback on their product or website’s user experience all the time. In this post, you'll learn:

- What usability testing is

- Its purpose and goals

- Scenarios where it can work

- Real-life examples and case studies

- How to conduct one of your own

- Scripted questions you can use along the way

What is usability testing?

Usability testing is a method of evaluating a product or website’s user experience. By testing the usability of their product or website with a representative group of their users or customers, UX researchers can determine if their actual users can easily and intuitively use their product or website.

UX researchers will usually conduct usability studies on each iteration of their product from its early development to its release.

During a usability study, the moderator asks participants in their individual user session to complete a series of tasks while the rest of the team observes and takes notes. By watching their actual users navigate their product or website and listening to their praises and concerns about it, they can see when the participants can quickly and successfully complete tasks and where they’re enjoying the user experience, encountering problems, and experiencing confusion.

After conducting their study, they’ll analyze the results and report any interesting insights to the project lead.

What is the purpose of usability testing?

Usability testing allows researchers to uncover any problems with their product's user experience, decide how to fix these problems, and ultimately determine if the product is usable enough.

Identifying and fixing these early issues saves the company both time and money: Developers don’t have to overhaul the code of a poorly designed product that’s already built, and the product team is more likely to release it on schedule.

Benefits of Usability Testing

Usability testing has five major advantages over the other methods of examining a product's user experience (such as questionnaires or surveys):

- Usability testing provides an unbiased, accurate, and direct examination of your product or website’s user experience. By testing its usability on a sample of actual users who are detached from the amount of emotional investment your team has put into creating and designing the product or website, their feedback can resolve most of your team’s internal debates.

- Usability testing is convenient. To conduct your study, all you have to do is find a quiet room and bring in portable recording equipment. If you don’t have recording equipment, someone on your team can just take notes.

- Usability testing can tell you what your users do on your site or product and why they take these actions.

- Usability testing lets you address your product’s or website’s issues before you spend a ton of money creating something that ends up having a poor design.

- For your business, intuitive design boosts customer usage and their results, driving demand for your product.

Usability Testing Scenario Examples

Usability testing sounds great in theory, but what value does it provide in practice? Here's what it can do to actually make a difference for your product:

1. Identify points of friction in the usability of your product.

As Brian Halligan said at INBOUND 2019, "Dollars flow where friction is low." This is just as true in UX as it is in sales or customer service. The more friction your product has, the more reason your users will have to find something that's easier to use.

Usability testing can uncover points of friction from customer feedback.

For example: "My process begins in Google Drive. I keep switching between windows and making multiple clicks just to copy and paste from Drive into this interface."

Even though the product team may have had that task in mind when they created the tool, seeing it in action and hearing the user's frustration uncovered a use case that the tool didn't account for. It might lead the team to solve this problem by creating an easy import feature or a way to access Drive within the interface, reducing the number of clicks the user needs to make to accomplish their task.

2. Stress test across many environments and use cases.

Our products don't exist in a vacuum, and sometimes development environments are unable to account for all the variables. Getting the product out and tested by users can uncover bugs that you may not have noticed while testing internally.

For example: "The check boxes disappear when I click on them."

Let's say that the team investigates why this might be, and they discover that the user is on a browser that's not commonly used (or a browser version that's outdated).

If the developers only tested across the browsers used in-house, they may have missed this bug, and it could have resulted in customer frustration.

3. Provide diverse perspectives from your user base.

While individuals in our customer bases have a lot in common (in particular, the things that led them to need and use our products), each individual is unique and brings a different perspective to the table. These perspectives are invaluable in uncovering issues that may not have occurred to your team.

For example: "I can't find where I'm supposed to click."

Upon further investigation, it's possible that this feedback came from a user who is color blind, leading your team to realize that the color choices did not create enough contrast for this user to navigate properly.

Insights from diverse perspectives can lead to design, architectural, copy, and accessibility improvements.

4. Give you clear insights into your product's strengths and weaknesses.

You likely have competitors in your industry whose products are better than yours in some areas and worse than yours in others. These variations in the market lead to competitive differences and opportunities. User feedback can help you close the gap on critical issues and identify what positioning is working.

For example: "This interface is so much easier to use and more attractive than [competitor product]. I just wish that I could also do [task] with it."

Two scenarios are possible based on that feedback:

- Your product can already accomplish the task the user wants. You just have to make it clear that the feature exists by improving copy or navigation.

- You have a really good opportunity to incorporate such a feature in future iterations of the product.

5. Inspire you with potential future additions or enhancements.

Speaking of future iterations, that brings us to the next example of how usability testing can make a difference for your product: The feedback that you gather can inspire future improvements to your tool.

It's not just about rooting out issues but also envisioning where you can go next that will make the most difference for your customers. And who better to ask than your prospective and current customers themselves?

Usability Testing Examples & Case Studies

Now that you have an idea of the scenarios in which usability testing can help, here are some real-life examples of it in action:

1. User Fountain + Satchel

Satchel is a developer of education software, and their goal was to improve the experience of the site for their users. Consulting agency User Fountain conducted a usability test focusing on one question: "If you were interested in Satchel's product, how would you progress with getting more information about the product and its pricing?"

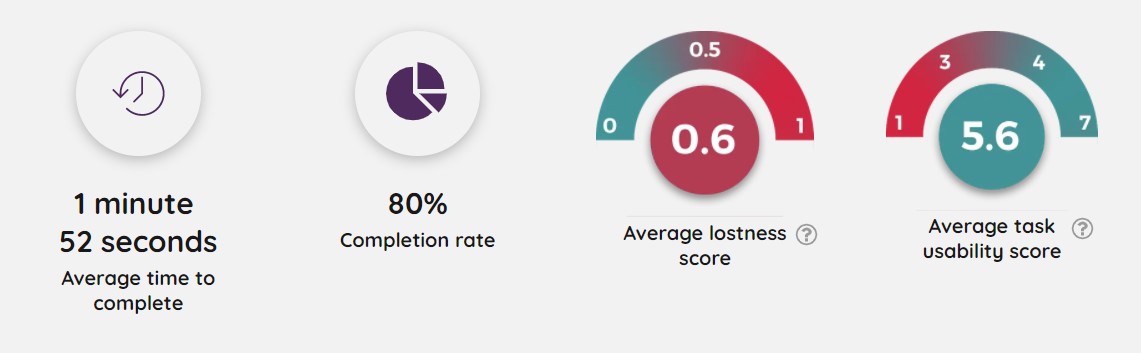

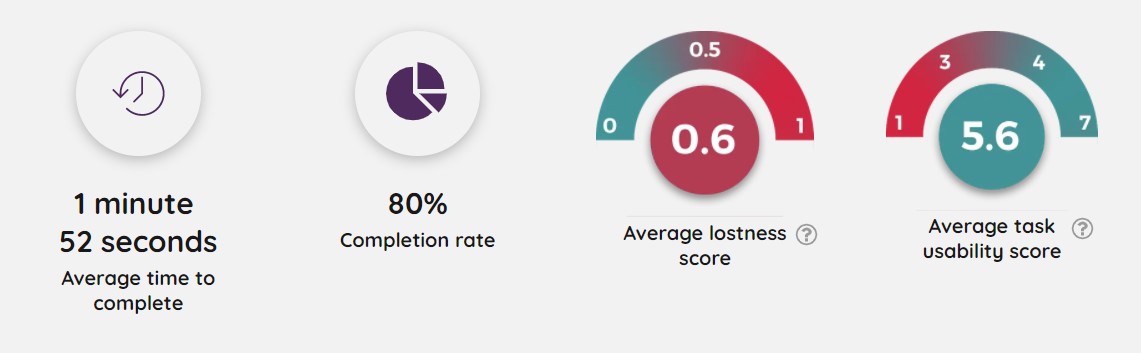

During the test, User Fountain noted significant frustration as users attempted to complete the task, particularly when it came to locating pricing information. Only 80% of users were successful.

This led User Fountain to create the hypothesis that a "Get Pricing" link would make the process clearer for users. From there, they tested a new variation with such a link against a control version. The variant won, resulting in a 34% increase in demo requests.

By testing a hypothesis based on real feedback, friction was eliminated for the user, bringing real value to Satchel.

2. Kylie.Design + Digi-Key

Ecommerce site Digi-Key approached consultant Kylie.Design to uncover which site interactions had the highest success rates and what features those interactions had in common.

They conducted more than 120 tests and recorded:

- Click paths from each user

- Which actions were most common

- The success rates for each

This as well as the written and verbal feedback provided by participants informed the new design, which resulted in increasing purchaser success rates from 68.2% to 83.3%.

In essence, Digi-Key was able to identify their most successful features and double down on them, improving the experience and their bottom line.

3. Sparkbox + An Academic Medical Center

An academic medical center in the Midwest partnered with consulting agency Sparkbox to improve the patient experience on their homepage, where some features were suffering from low engagement.

Sparkbox conducted a usability study to determine what users wanted from the homepage and what didn't meet their expectations. From there, they were able to propose solutions to increase engagement.

For example, one key action was the ability to access electronic medical records. The new design based on user feedback increased the success rate from 45% to 94%.

This is a great example of putting the user's pains and desires front-and-center in a design.

The 9 Phases of a Usability Study

1. Decide which part of your product or website you want to test.

Do you have any pressing questions about how your users will interact with certain parts of your design, like a particular interaction or workflow? Or are you wondering what users will do first when they land on your product page? Gather your thoughts about your product or website’s pros, cons, and areas of improvement, so you can create a solid hypothesis for your study.

2. Pick your study’s tasks.

Your participants' tasks should be your user’s most common goals when they interact with your product or website, like making a purchase.

3. Set a standard for success.

Once you know what to test and how to test it, make sure to set clear criteria to determine success for each task. For instance, when I was in a usability study for HubSpot’s Content Strategy tool, I had to add a blog post to a cluster and report exactly what I did. Setting a threshold of success and failure for each task lets you determine if your product's user experience is intuitive enough or not.
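As a rough illustration of what "clear criteria" can look like, the sketch below checks one task against two thresholds: a minimum completion rate and a maximum median time among participants who finished. The record shape, the task name, the sample numbers, and both threshold values are hypothetical and chosen only for the example; your own criteria may look quite different.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskResult:
    """One participant's attempt at one task (hypothetical record shape)."""
    task: str
    completed: bool
    seconds: float


def task_passes(results, task, min_success_rate, max_median_seconds):
    """Return True if the task meets both illustrative thresholds."""
    attempts = [r for r in results if r.task == task]
    if not attempts:
        raise ValueError(f"no attempts recorded for task {task!r}")
    finished_times = [r.seconds for r in attempts if r.completed]
    if not finished_times:
        return False  # nobody completed the task
    success_rate = len(finished_times) / len(attempts)
    return (success_rate >= min_success_rate
            and median(finished_times) <= max_median_seconds)


# Made-up session data: five participants, one task.
results = [
    TaskResult("add_post_to_cluster", True, 40),
    TaskResult("add_post_to_cluster", True, 55),
    TaskResult("add_post_to_cluster", True, 62),
    TaskResult("add_post_to_cluster", True, 90),
    TaskResult("add_post_to_cluster", False, 120),
]

print(task_passes(results, "add_post_to_cluster", 0.8, 60))
```

Deciding the thresholds before the sessions start keeps the team from adjusting the bar after seeing the results.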

4. Write a study plan and script.

At the beginning of your script, you should include the purpose of the study, if you’ll be recording, some background on the product or website, questions to learn about the participants’ current knowledge of the product or website, and, finally, their tasks. To make your study consistent, unbiased, and scientific, moderators should follow the same script in each user session.

5. Delegate roles.

During your usability study, the moderator has to remain neutral, carefully guiding the participants through the tasks while strictly following the script. Whoever on your team is best at staying neutral, not giving in to social pressure, and making participants feel comfortable while pushing them to complete the tasks should be your moderator.

Note-taking during the study is also just as important. If there’s no recorded data, you can’t extract any insights that’ll prove or disprove your hypothesis. Your team’s most attentive listener should be your note-taker during the study.

6. Find your participants.

Screening and recruiting the right participants is the hardest part of usability testing. Most usability experts suggest you should only test five participants during each study, but your participants should also closely resemble your actual user base. With such a small sample size, it’s hard to replicate your actual user base in your study.

To recruit the ideal participants for your study, create as detailed and specific a persona as you can, then incentivize people who match it to participate with a gift card or another monetary reward.

Recruiting colleagues from other departments who would potentially use your product is another option. But you don’t want any of your team members to know the participants, because a personal relationship can create bias -- since they want to be nice to each other, the researcher might help a user complete a task or the user might not want to constructively criticize the researcher’s product design.
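The five-participant guideline mentioned above is commonly traced to a model published by Jakob Nielsen and Tom Landauer, in which the share of usability problems found by n participants is 1 - (1 - L)^n. The sketch below assumes that model and uses L = 0.31, the average detection rate Nielsen reports; this post does not cite the model itself, and the rate for your product could be quite different, so treat the output as a rough planning aid.

```python
def share_of_problems_found(participants, detection_rate=0.31):
    """Estimated share of usability problems found by a group of participants.

    Assumes each participant independently uncovers any given problem with
    probability `detection_rate` (0.31 is an assumed average, not a constant).
    """
    if participants < 0:
        raise ValueError("participants must be non-negative")
    if not 0 <= detection_rate <= 1:
        raise ValueError("detection_rate must be between 0 and 1")
    return 1 - (1 - detection_rate) ** participants


for n in (1, 3, 5, 10):
    print(n, round(share_of_problems_found(n), 2))
```

Under these assumptions, five participants surface roughly 84% of the problems, and each additional participant adds less than the one before.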

7. Conduct the study.

During the actual study, you should ask your participants to complete one task at a time, without your help or guidance. If the participant asks you how to do something, don’t say anything. You want to see how long it takes users to figure out your interface.

Asking participants to “think out loud” is also an effective tactic -- you’ll know what’s going through a user’s head when they interact with your product or website.

After they complete each task, ask for their feedback: whether they expected to see what they just saw, whether they would’ve completed the task if it weren’t a test, whether they would recommend your product to a friend, and what they would change about it. This qualitative data can pinpoint more pros and cons of your design.

8. Analyze your data.

You’ll collect a ton of qualitative data after your study. Analyzing it will help you discover patterns of problems, gauge the severity of each usability issue, and provide design recommendations to the engineering team.

When you analyze your data, make sure to pay attention to both the users’ performance and their feelings about the product. It’s not unusual for a participant to quickly and successfully achieve your goal but still feel negatively about the product experience.
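One simple way to look for patterns is to tally how many distinct participants ran into each issue and how severe it was at its worst. The sketch below does that for a handful of made-up notes; the tuple layout, the issue labels, and the 1-to-4 severity scale are assumptions for illustration, not a standard format.

```python
from collections import defaultdict

# Made-up notes: (participant id, issue label, severity from 1 = minor to 4 = blocker).
observations = [
    ("P1", "pricing link hard to find", 3),
    ("P2", "pricing link hard to find", 2),
    ("P3", "pricing link hard to find", 3),
    ("P1", "pricing link hard to find", 3),  # same participant again; counted once
    ("P1", "checkbox disappears on click", 4),
    ("P4", "unclear button label", 1),
    ("P2", "unclear button label", 2),
]


def rank_issues(observations):
    """Return (issue, participants affected, worst severity), most widespread first."""
    affected = defaultdict(set)
    worst = defaultdict(int)
    for participant, issue, severity in observations:
        affected[issue].add(participant)
        worst[issue] = max(worst[issue], severity)
    rows = [(issue, len(people), worst[issue]) for issue, people in affected.items()]
    # Sort by reach, then by severity, then alphabetically for a stable order.
    return sorted(rows, key=lambda row: (-row[1], -row[2], row[0]))


for issue, count, severity in rank_issues(observations):
    print(f"{issue}: {count} participant(s), worst severity {severity}")
```

Note that a high-severity issue seen by only one participant (the disappearing checkbox here) sorts last under this ordering, so it is worth reading the full list rather than only the top rows.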

9. Report your findings.

After extracting insights from your data, report the main takeaways and lay out the next steps for improving your product or website’s design and the enhancements you expect to see during the next round of testing.

The 3 Most Common Types of Usability Tests

1. Hallway/Guerrilla Usability Testing

This is where you set up your study somewhere with a lot of foot traffic. It allows you to ask randomly selected people who have most likely never even heard of your product or website -- like passers-by -- to evaluate its user experience.

2. Remote/Unmoderated Usability Testing

Remote/unmoderated usability testing has two main advantages: it uses third-party software to recruit target participants for your study, so you can spend less time recruiting and more time researching. It also allows your participants to interact with your interface by themselves and in their natural environment -- the software can record video and audio of your user completing tasks.

Letting participants interact with your design in their natural environment with no one breathing down their neck can give you more realistic, objective feedback. When you’re in the same room as your participants, it can prompt them to put more effort into completing your tasks since they don’t want to seem incompetent around an expert. Your perceived expertise can also lead them to try to please you instead of being honest when you ask for their opinion, skewing their reactions and feedback on your user experience.

3. Moderated Usability Testing

Moderated usability testing also has two main advantages: interacting with participants in person or through a video call lets you ask them to elaborate on their comments if you don’t understand them, which is impossible to do in an unmoderated usability study. You’ll also be able to help your users understand the task and keep them on track if your instructions don’t initially register with them.

Usability Testing Script & Questions

Following one script or even a template of questions for every one of your usability studies wouldn't make any sense -- each study's subject matter is different. You'll need to tailor your questions to the things you want to learn, but most importantly, you'll need to know how to ask good questions.

1. When you [action], what's the first thing you do to [goal]?

Questions such as this one give insight into how users are inclined to interact with the tool and what their natural behavior is.

Julie Fischer, one of HubSpot's Senior UX researchers, gives this advice: "Don't ask leading questions that insert your own bias or opinion into the participants' mind. They'll end up doing what you want them to do instead of what they would do by themselves."

For example, "Find [x]" is better than "Are you able to easily find [x]?" The latter carries a connotation that may affect how they use the product or answer the question.

2. How satisfied are you with the [attribute] of [feature]?

Avoid leading the participants by asking questions like "Is this feature too complicated?" Instead, gauge their satisfaction on a Likert scale that provides a number range from highly dissatisfied to highly satisfied. This will provide a less biased result than leading them to a negative answer they may not otherwise have had.
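Once the ratings are in, a small summary can make them easier to compare across features. The sketch below assumes a 5-point scale where 1 is the lowest satisfaction and 5 the highest, and reports the distribution, the median, and the share of participants who chose 4 or 5. The scale length, the sample responses, and the choice of summary figures are all assumptions for the example.

```python
from collections import Counter
from statistics import median

SCALE = (1, 2, 3, 4, 5)  # assumed 5-point scale: 1 = lowest, 5 = highest satisfaction


def summarize_likert(responses):
    """Summarize satisfaction ratings collected on the assumed 5-point scale."""
    if not responses:
        raise ValueError("no responses to summarize")
    invalid = [r for r in responses if r not in SCALE]
    if invalid:
        raise ValueError(f"ratings outside the scale: {invalid}")
    counts = Counter(responses)
    return {
        "distribution": {score: counts[score] for score in SCALE},
        "median": median(responses),
        "top_two_share": (counts[4] + counts[5]) / len(responses),
    }


# Made-up ratings from five participants for one feature.
summary = summarize_likert([4, 5, 3, 4, 2])
print(summary)
```

The median is used here rather than the mean because Likert ratings are ordinal; with only a handful of participants, the full distribution is usually more informative than any single number.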

3. How do you use [feature]?

There may be multiple ways to achieve the same goal or utilize the same feature. This question will help uncover how users interact with a specific aspect of the product and what they find valuable.

4. What parts of [the product] do you use the most? Why?

This question is meant to help you understand the strengths of the product and what about it creates raving fans. This will indicate what you should absolutely keep and perhaps even lead to insights into what you can improve for other features.

5. What parts of [the product] do you use the least? Why?

This question is meant to uncover the weaknesses of the product or the friction in its use. That way, you can rectify any issues or plan future improvements to close the gap between user expectations and reality.

6. If you could change one thing about [feature], what would it be?

Because this question is so similar to #5, you may get some of the same answers. However, you may be surprised by the aspirational things that your users say here.

7. What do you expect [action/feature] to do?

Here's another tip from Julie Fischer:

"When participants ask 'What will this do?' it's best to reply with the question 'What do you expect it to do?' rather than telling them the answer."

Doing this can uncover user expectations as well as clarity issues with the copy.

Your Work Could Always Use a Fresh Perspective

Letting another person review and possibly criticize your work takes courage -- no one wants a bruised ego. But most of the time, when you allow people to constructively criticize or even rip apart your article or product design, especially when your work is intended to help these people, your final result will be better than you could've ever imagined.

Editor's note: This post was originally published in August 2018 and has been updated for comprehensiveness.

from Marketing https://blog.hubspot.com/marketing/usability-testing

In practically any discipline, it's a good idea to have others evaluate your work with fresh eyes, and this is especially true in user experience and web design. Otherwise, your partiality for your own work can skew your perception of it. Learning directly from the people that your work is actually for — your users — is what enables you to craft the best user experience possible.

UX and design professionals leverage usability testing to get user feedback on their product or website’s user experience all the time. In this post, you'll learn:

What usability testing is

- Its purpose and goals

- Scenarios where it can work

- Real-life examples and case studies

- How to conduct one of your own

- Scripted questions you can use along the way

What is usability testing?

Usability testing is a method of evaluating a product or website’s user experience. By testing the usability of their product or website with a representative group of their users or customers, UX researchers can determine if their actual users can easily and intuitively use their product or website.

UX researchers will usually conduct usability studies on each iteration of their product from its early development to its release.

During a usability study, the moderator asks participants in their individual user session to complete a series of tasks while the rest of the team observes and takes notes. By watching their actual users navigate their product or website and listening to their praises and concerns about it, they can see when the participants can quickly and successfully complete tasks and where they’re enjoying the user experience, encountering problems, and experiencing confusion.

After conducting their study, they’ll analyze the results and report any interesting insights to the project lead.

What is the purpose of usability testing?

Usability testing allows researchers to uncover any problems with their product's user experience, decide how to fix these problems, and ultimately determine if the product is usable enough.

Identifying and fixing these early issues saves the company both time and money: Developers don’t have to overhaul the code of a poorly designed product that’s already built, and the product team is more likely to release it on schedule.

Benefits of Usability Testing

Usability testing has five major advantages over the other methods of examining a product's user experience (such as questionnaires or surveys):

- Usability testing provides an unbiased, accurate, and direct examination of your product or website’s user experience. By testing its usability on a sample of actual users who are detached from the amount of emotional investment your team has put into creating and designing the product or website, their feedback can resolve most of your team’s internal debates.

- Usability testing is convenient. To conduct your study, all you have to do is find a quiet room and bring in portable recording equipment. If you don’t have recording equipment, someone on your team can just take notes.

- Usability testing can tell you what your users do on your site or product and why they take these actions.

- Usability testing lets you address your product’s or website’s issues before you spend a ton of money creating something that ends up having a poor design.

- For your business, intuitive design boosts customer usage and their results, driving demand for your product.

Usability Testing Scenario Examples

Usability testing sounds great in theory, but what value does it provide in practice? Here's what it can do to actually make a difference for your product:

1. Identify points of friction in the usability of your product.

As Brian Halligan said at INBOUND 2019, "Dollars flow where friction is low." This just as true in UX as it is in sales or customer service. The more friction your product has, the more reason your users will have to find something that's easier to use.

Usability testing can uncover points of friction from customer feedback.

For example: "My process begins in Google Drive. I keep switching between windows and making multiple clicks just to copy and paste from Drive into this interface."

Even though the product team may have had that task in mind when they created the tool, seeing it in action and hearing the user's frustration uncovered a use case that the tool didn't compensate for. It might lead the team to solve for this problem by creating an easy import feature or way to access Drive within the interface to reduce the number of clicks the user needs to make to accomplish their task.

2. Stress test across many environments and use cases.

Our products don't exist in a vacuum, and sometimes development environments are unable to compensate for all the variables. Getting the product out and tested by users can uncover bugs that you may not have noticed while testing internally.

For example: "The check boxes disappear when I click on them."

Let's say that the team investigates why this might be, and they discover that the user is on a browser that's not commonly used (or a browser version that's outdated).

If the developers only tested across the browsers used in-house, they may have missed this bug, and it could have resulted in customer frustration.

3. Provide diverse perspectives from your user base.

While individuals in our customer bases have a lot in common (in particular, the things that led them to need and use our products), each individual is unique and brings a different perspective to the table. These perspectives are invaluable in uncovering issues that may not have occurred to your team.

For example: "I can't find where I'm supposed to click."

Upon further investigation, it's possible that this feedback came from a user who is color blind, leading your team to realize that the color choices did not create enough contrast for this user to navigate properly.

Insights from diverse perspectives can lead to design, architectural, copy, and accessibility improvements.

4. Give you clear insights into your product's strengths and weaknesses.

You likely have competitors in your industry whose products are better than yours in some areas and worse than yours in others. These variations in the market lead to competitive differences and opportunities. User feedback can help you close the gap on critical issues and identify what positioning is working.

For example: "This interface is so much easier to use and more attractive than [competitor product]. I just wish that I could also do [task] with it."

Two scenarios are possible based on that feedback:

- Your product can already accomplish the task the user wants. You just have to make it clear that the feature exists by improving copy or navigation.

- You have a really good opportunity to incorporate such a feature in future iterations of the product.

5. Inspire you with potential future additions or enhancements.

Speaking of future iterations, that comes to the next example of how usability testing can make a difference for your product: The feedback that you gather can inspire future improvements to your tool.

It's not just about rooting out issues but also envisioning where you can go next that will make the most difference for your customers. And who best to ask but your prospective and current customers themselves?

Usability Testing Examples & Case Studies

Now that you have an idea of the scenarios in which usability testing can help, here are some real-life examples of it in action:

1. User Fountain + Satchel

Satchel is a developer of education software, and their goal was to improve the experience of the site for their users. Consulting agency User Fountain conducted a usability test focusing on one question: "If you were interested in Satchel's product, how would you progress with getting more information about the product and its pricing?"

During the test, User Fountain noted significant frustration as users attempted to complete the task, particularly when it came to locating pricing information. Only 80% of users were successful.

This led User Fountain to create the hypothesis that a "Get Pricing" link would make the process clearer for users. From there, they tested a new variation with such a link against a control version. The variant won, resulting in a 34% increase in demo requests.

By testing a hypothesis based on real feedback, friction was eliminated for the user, bringing real value to Satchel.

2. Kylie.Design + Digi-Key

Ecommerce site Digi-Key approached consultant Kylie.Design to uncover which site interactions had the highest success rates and what features those interactions had in common.

They conducted more than 120 tests and recorded:

- Click paths from each user

- Which actions were most common

- The success rates for each

This as well as the written and verbal feedback provided by participants informed the new design, which resulted in increasing purchaser success rates from 68.2% to 83.3%.

In essence, Digi-Key was able to identify their most successful features and double-down on them, improving the experience and their bottom line.

3. Sparkbox + An Academic Medical Center

An academic medical center in the midwest partnered with consulting agency Sparkbox to improve the patient experience on their homepage, where some features were suffering from low engagement.

Sparkbox conducted a usability study to determine what users wanted from the homepage and what didn't meet their expectations. From there, they were able to propose solutions to increase engagement.

For example, one key action was the ability to access electronic medical records. The new design based on user feedback increased the success rate from 45% to 94%.

This is a great example of putting the user's pains and desires front-and-center in a design.

The 9 Phases of a Usability Study

1. Decide which part of your product or website you want to test.

Do you have any pressing questions about how your users will interact with certain parts of your design, like a particular interaction or workflow? Or are you wondering what users will do first when they land on your product page? Gather your thoughts about your product or website’s pros, cons, and areas of improvement, so you can create a solid hypothesis for your study.

2. Pick your study’s tasks.

Your participants' tasks should be your user’s most common goals when they interact with your product or website, like making a purchase.

3. Set a standard for success.

Once you know what to test and how to test it, make sure to set clear criteria to determine success for each task. For instance, when I was in a usability study for HubSpot’s Content Strategy tool, I had to add a blog post to a cluster and report exactly what I did. Setting a threshold of success and failure for each task lets you determine if your product's user experience is intuitive enough or not.

4. Write a study plan and script.

At the beginning of your script, you should include the purpose of the study, if you’ll be recording, some background on the product or website, questions to learn about the participants’ current knowledge of the product or website, and, finally, their tasks. To make your study consistent, unbiased, and scientific, moderators should follow the same script in each user session.

5. Delegate roles.

During your usability study, the moderator has to remain neutral, carefully guiding the participants through the tasks while strictly following the script. Whoever on your team is best at staying neutral, not giving into social pressure, and making participants feel comfortable while pushing them to complete the tasks should be your moderator

Note-taking during the study is also just as important. If there’s no recorded data, you can’t extract any insights that’ll prove or disprove your hypothesis. Your team’s most attentive listener should be your note-taker during the study.

6. Find your participants.

Screening and recruiting the right participants is the hardest part of usability testing. Most usability experts suggest you should only test five participants during each study, but your participants should also closely resemble your actual user base. With such a small sample size, it’s hard to replicate your actual user base in your study.

To recruit the ideal participants for your study, create the most detailed and specific persona you possibly can, then incentivize people who match it to participate with a gift card or another monetary reward.

Recruiting colleagues from other departments who would potentially use your product is another option. But you don’t want any of your team members to know the participants because a personal relationship can create bias -- since they want to be nice to each other, the researcher might help a user complete a task or the user might not want to constructively criticize the researcher’s product design.

7. Conduct the study.

During the actual study, you should ask your participants to complete one task at a time, without your help or guidance. If the participant asks you how to do something, don’t say anything. You want to see how long it takes users to figure out your interface.

Asking participants to “think out loud” is also an effective tactic -- you’ll know what’s going through a user’s head when they interact with your product or website.

After they complete each task, ask for their feedback: whether they expected to see what they just saw, whether they would’ve completed the task if it weren’t a test, whether they would recommend your product to a friend, and what they would change about it. This qualitative data can pinpoint more pros and cons of your design.

8. Analyze your data.

You’ll have collected a ton of qualitative data by the end of your study. Analyzing it will help you discover patterns of problems, gauge the severity of each usability issue, and provide design recommendations to the engineering team.

When you analyze your data, make sure to pay attention to both the users’ performance and their feelings about the product. It’s not unusual for a participant to quickly and successfully achieve your goal but still feel negatively about the product experience.
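To spot patterns and gauge severity, it can help to tally the note-taker's observations across sessions. The following is a minimal sketch under stated assumptions: the participants, issues, and the 1-to-3 severity scale (3 meaning the issue blocked the task) are all made up for illustration, and `rank_issues` is a hypothetical helper.

```python
from collections import defaultdict

# Hypothetical notes: (participant, issue, severity from 1 to 3, where 3 blocks the task)
observations = [
    ("P1", "could not find the save button", 3),
    ("P2", "could not find the save button", 3),
    ("P3", "unclear label on topic field", 1),
    ("P4", "could not find the save button", 3),
    ("P4", "unclear label on topic field", 1),
    ("P5", "search returned no results", 2),
]


def rank_issues(notes):
    """Return (issue, participants affected, worst severity), most urgent first."""
    affected = defaultdict(set)
    worst = {}
    for participant, issue, severity in notes:
        affected[issue].add(participant)  # count each participant once per issue
        worst[issue] = max(worst.get(issue, 0), severity)
    ranked = [(issue, len(people), worst[issue]) for issue, people in affected.items()]
    # Sort by severity first, then by how many participants hit the issue.
    ranked.sort(key=lambda row: (row[2], row[1]), reverse=True)
    return ranked


for issue, count, severity in rank_issues(observations):
    print(f"severity {severity}, {count} participant(s): {issue}")
```

Sorting by severity before frequency is one possible choice; a team might reasonably weigh the two differently.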

9. Report your findings.

After extracting insights from your data, report the main takeaways and lay out the next steps for improving your product or website’s design and the enhancements you expect to see during the next round of testing.

The 3 Most Common Types of Usability Tests

1. Hallway/Guerrilla Usability Testing

This is where you set up your study somewhere with a lot of foot traffic. It allows you to ask randomly selected people who have most likely never even heard of your product or website -- like passers-by -- to evaluate its user experience.

2. Remote/Unmoderated Usability Testing

Remote/unmoderated usability testing has two main advantages: it uses third-party software to recruit target participants for your study, so you can spend less time recruiting and more time researching. It also allows your participants to interact with your interface by themselves and in their natural environment -- the software can record video and audio of your user completing tasks.

Letting participants interact with your design in their natural environment with no one breathing down their neck can give you more realistic, objective feedback. When you’re in the same room as your participants, it can prompt them to put more effort into completing your tasks since they don’t want to seem incompetent around an expert. Your perceived expertise can also lead them to try to please you instead of being honest when you ask for their opinion, skewing their reactions and feedback on your user experience.

3. Moderated Usability Testing

Moderated usability testing also has two main advantages: interacting with participants in person or through a video call lets you ask them to elaborate on their comments if you don’t understand them, which is impossible to do in an unmoderated usability study. You’ll also be able to help your users understand the task and keep them on track if your instructions don’t initially register with them.

Usability Testing Script & Questions

Following one script or even a template of questions for every one of your usability studies wouldn't make any sense -- each study's subject matter is different. You'll need to tailor your questions to the things you want to learn, but most importantly, you'll need to know how to ask good questions.

1. When you [action], what's the first thing you do to [goal]?

Questions such as this one give insight into how users are inclined to interact with the tool and what their natural behavior is.

Julie Fischer, one of HubSpot's Senior UX researchers, gives this advice: "Don't ask leading questions that insert your own bias or opinion into the participants' mind. They'll end up doing what you want them to do instead of what they would do by themselves."

For example, "Find [x]" is better than "Are you able to easily find [x]?" The latter adds a connotation that may affect how they use the product or answer the question.

2. How satisfied are you with the [attribute] of [feature]?

Avoid leading the participants by asking questions like "Is this feature too complicated?" Instead, gauge their satisfaction on a Likert scale that provides a number range from highly unsatisfied to highly satisfied. This will provide a less biased result than leading them to a negative answer they may not otherwise have had.
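Once the answers are in, the scores can be summarized without much tooling. This is a rough sketch, assuming a five-point scale where 1 means highly unsatisfied and 5 means highly satisfied; the responses are invented and `summarize_likert` is a hypothetical helper.

```python
from collections import Counter
from statistics import median

SCALE = range(1, 6)  # assumed scale: 1 = highly unsatisfied ... 5 = highly satisfied


def summarize_likert(responses):
    """Return the median score and how many participants chose each score."""
    for score in responses:
        if score not in SCALE:
            raise ValueError(f"score {score} is outside the 1-5 scale")
    counts = Counter(responses)
    return {
        "median": median(responses),
        "distribution": {score: counts.get(score, 0) for score in SCALE},
    }


# Invented answers from five participants to one satisfaction question.
summary = summarize_likert([4, 5, 3, 4, 2])
print(summary["median"])        # 4
print(summary["distribution"])  # {1: 0, 2: 1, 3: 1, 4: 2, 5: 1}
```

The median is used here because Likert scores are ordinal, and with only a handful of participants the full distribution is usually worth reading alongside it.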

3. How do you use [feature]?

There may be multiple ways to achieve the same goal or utilize the same feature. This question will help uncover how users interact with a specific aspect of the product and what they find valuable.

4. What parts of [the product] do you use the most? Why?

This question is meant to help you understand the strengths of the product and what about it creates raving fans. This will indicate what you should absolutely keep and perhaps even lead to insights into what you can improve for other features.

5. What parts of [the product] do you use the least? Why?

This question is meant to uncover the weaknesses of the product or the friction in its use. That way, you can rectify any issues or plan future improvements to close the gap between user expectations and reality.

6. If you could change one thing about [feature], what would it be?

Because it's so similar to #5, you may get some of the same answers. However, you'd be surprised about the aspirational things that your users might say here.

7. What do you expect [action/feature] to do?

Here's another tip from Julie Fischer:

"When participants ask 'What will this do?' it's best to reply with the question 'What do you expect it to do?' rather than telling them the answer."

Doing this can uncover user expectations as well as clarity issues with the copy.

Your Work Could Always Use a Fresh Perspective

Letting another person review and possibly criticize your work takes courage -- no one wants a bruised ego. But most of the time, when you allow people to constructively criticize or even rip apart your article or product design, especially when your work is intended to help these people, your final result will be better than you could've ever imagined.

Editor's note: This post was originally published in August 2018 and has been updated for comprehensiveness.
